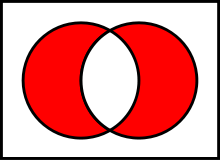

The Logical XOR

Exclusive disjunction or exclusive or is a logical operation that outputs true whenever both inputs differ (one is true, the other is false). It is symbolized by the prefix operator J and by the infix operators XOR, EOR, EXOR, and ⊕. It is commonly used to ensure an unbiased mean output from physical random number generators, and it is used for all devices in the GCP network.

The effect and implication of using an XOR to eliminate 1st order bias deserves comment. For example, Jeff Scargle, a skeptical correspondent, believes we are throwing out the baby

with this procedure.

I asked York Dobyns to give a technically robust answer to Jeff’s questions and concerns.

Jeff Scargle, Monday 18 Apr 2005:

As you may know, one of things I consider oddest and most important is the

XORbusiness. I want to be sure I fully understand the process.There appears to be only the following on the web site:

A logical XOR of the raw bit-stream with a fixed pattern of bits with exactly 0.5 probability compensates mean biases of the regs. The Pear and Orion regs use a

01bitmask and the Mindsong uses a 560-bit mask (the Mindsong mask is the string of all possible 8-bit combinations of 40’s and 41’s. Analysis confirms that the XOR’d data has very good, stable means. XORing does more than correct mean bias. For example, XORing a binomial[N,p] will transform it to a binomial[N, 0.5], so the variance is transformed as well.

I find this explanation terse and confusing. ...fixed pattern... and yet it is random (that is the key).

York replies:

The goal in the RNG design is to have the output be a well-conditioned stream of random bits; ideally the bits should be independent and identically distributed with p=0.5.

However, Roger’s website as quoted above errs on a couple of points (sorry, Roger). First a minor point: while I might be mistaken, I believe that Orions work by running *two* physical noise sources and XORing them against each other, not by XORing a physical noise source against a deterministic template.

More important error: The xor process does *not* transform a binomial[N,p] into binomial[N,0.5]; the transformed string is forced to p=0.5 but can depart grossly from the binomial distribution. The reason is that an XOR with 50% 1’s actually does is map the B[N,P] binomial function onto B[N/2,P] + B[N/2,1-P]. The expectation therefore becomes

(N/2 * P + N/2 * (1-P)) = N/2 * (P + 1 - P) = N/2,

independent of the raw P, as desired. The variance, on the other hand, becomes

N/2 * P * (1-P) + N/2 * (1-P) * P = N*P*(1-P)

— in other words, the variance is *unchanged* from that of the original binomial. The variance of B[N,0.5], on the other hand, is N*.5*.5 = N/4; the variance of the xor’ed result will be smaller for any P != 0.5.Finally, in terms of understanding the details of the process: the PEAR REG effectively XOR’s the raw bit stream with the sequence 10101010... This has the effect of inverting every other bit. The Mindsong device replaces the rigid alternation with a 560-bit sequence constructed from taking the 70 different bytes with 4 1’s and 4 0’s, and arranging them in an order that minimizes byte-to-byte correlations. The fact that each byte contains equal numbers of byte-to-byte correlations. The fact that each byte contains equal numbers of 1’s and 0’s imposes a bit-to-bit autocorrelation of about -0.14 at lag 1; while not perfect this is a large improvement over the autocorrelation of -1 in the PEAR template. In any case, the autocorrelation in the template is relevant only if the original bits are autocorrelated.

As to the motivation for using the XOR: As Roger noted, most simple hardware random sources are vulnerable to many environmental confounds. Temperature, for instance, is likely to cause a change in the absolute value of a DC reference voltage, so that any device that uses that kind of comparison will have a temperature-dependent bias. The template XOR is an easy-to-implement approach that forces the probability of the output bits to 0.5, even though it doesn’t fix higher-level statistical imperfections.

Jeff Scargle:

Also, I feel this process rejects the kind of signal that most people probably think is being searched for ... namely consciousness affecting the RNGs ... but because of the XOR, you are only sensitive to consciousness affecting the final data stream. We discussed this before, but I still find it amazing that the entire operation has thrown out at least this baby along with the bathwater.

York Dobyns:

Consciousness affecting the RNGsis exactly what the current setup looks at. The template XOR is *part of* the RNG, built right into the hardware as an integral component. I think you may meanconsciousness affecting the analog noise source,which is a somewhat different hypothesis. There is an empirical expectation in experiments of this class, dating back to the results of experiments by Helmut Schmidt decades ago, that the details of how an RNG is built do not matter; it can be considered ablack boxthat spits out random numbers, and the effect of consciousness is to change the distribution of those numbers. Therefore, one is free to encumber the intermediate processing between raw analog noise and the final bitstring presented to the consciousness by as many steps as may be required to produce well-conditioned random bits: the consciousness effect, at least according to Schmidt, appears to be teleologically directed at final outcomes, not mechanistically directed at steps in the process.Subsequent investigation of this thesis has produced mixed results but suggests that there is at least a substantial equivalence class of RNGs for which it is true. Beyond that I can only restate Roger’s point: the GCP is an elaboration and generalization of experiments with field-portable RNGs; those experiments showed large positive effects which were the motivation that made the GCP seem worthwhile; all of the sources used for those experiments incorporated XOR templates (and would have been unacceptably vulnerable to environmental insults, *especially* for a field-portable unit used in unpredictable circumstances, without them).

Further Comments, Peter Bancel

York is right about the variance. It was the same conclusion I came to (and may have miscommunicated to Roger). I looked at it because we generally use variance-type measures and I was worried that the xor could effect variance deviations of the network output. Checking the Xor effect on a constant bias was a very simple stab at the problem.

More or less reassured, I dropped it and went on to other pressing things. HOWEVER, this is one of the issues that eventually needs to be fleshed out. It has been swept under the carpet due to time contraints.

Generally, for a constant probability bias p!= 1/2, Xor’ing gives odd moments ( like the mean and skewness) =0, as one would get from an unbiased p = 1/2. Even moments 2 and 4 are consistent with the biased prob. So after Xor’ing, the variance and kurtosis reflect the biased binomial distribution and odd moments the unbiased. [The even moments higher than 4 are more complicated still.] So the overall distribution is complicated.

Some observations:

- I agree with York’s response to JS. One could add that the field-reg experiments, which motivated the project, looked at variance-type measures, as does GCP. The Xor is not important for the stats we look at since it

passeslow-order even fluctuations. [Personally, I’d like to be more precise about this... needs some work].- The Xor does

fixhigher order statistical imperfections of one type: odd moments skewed by constant mean drift arecorrected.They are what would obtain under zero probability bias of the binomial output. I suppose this is only meaningful in the limit of large N metastable bias shifts.- The net positive bias of the mindsong regs implies that the raw output of those devices can’t be modeled by a random output with drifting bias, since that would imply a net negative variance.

- Since we know the regs do in fact have mean bias drifts, we should expect the variance of the network to be biased negatively, no? (barring other factors). There really should be a model of the network output that allows us to quantify this. If there were a grad student working on this, that would be the kind of thing that could go into a proper network calibration.